I am co-organizer (with Marco Bertini, Alex Berg and Cees Snoek) of the International Workshop on Web-scale Vision and Social Media, in conjunction with ECCV 2012.

The World Wide Web has become a large ecosystem that reaches billions of users through information processing and sharing, and most of this information resides in pixels. Web-based services like YouTube and Flickr, and social networks such as Facebook, have become increasingly popular, allowing people to easily upload, share and annotate massive amounts of images and videos all over the web.

Vision and social media has thus recently become a very active interdisciplinary research area, involving computer vision, multimedia, machine learning, information retrieval, and data mining. This workshop aims to bring together leading researchers in these fields to advocate and promote new research directions for problems involving vision and social media, such as large-scale visual content analysis, search and mining.

Our paper entitled “Enriching and Localizing Semantic Tags in Internet Videos” has been accepted by ACM Multimedia 2011.

Tagging of multimedia content is becoming more and more widespread as Web 2.0 sites, like Flickr and Facebook for images and YouTube and Vimeo for videos, have popularized tagging functionalities among their users. These user-generated tags are used to retrieve multimedia content, and to ease browsing and exploration of media collections, e.g. using tag clouds. However, not all media are equally tagged by users: with current browsers it is easy to tag a single photo, and even tagging a part of a photo, like a face, has become common on sites like Flickr and Facebook. Tagging a video sequence, on the other hand, is more complicated and time-consuming, so users tend to tag only the overall content of a video.

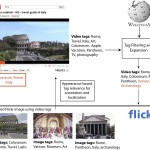

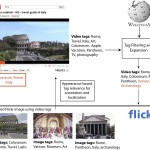

In this paper we present a system for automatic video annotation that increases the number of tags originally provided by users and localizes them temporally, associating tags with shots. This approach exploits the collective knowledge embedded in tags and Wikipedia, and the visual similarity between keyframes and images uploaded to social sites like YouTube and Flickr. Our paper is now available online.

Our paper on “Tag suggestion and localization in user-generated videos based on social knowledge” won the best paper award at the ACM SIGMM Workshop on Social Media (WSM’10), held in conjunction with ACM Multimedia 2010.

Nowadays, almost any web site that provides means for sharing user-generated multimedia content, like Flickr, Facebook, YouTube and Vimeo, has tagging functionalities to let users annotate the material that they want to share. The tags are then used to retrieve the uploaded content, and to ease browsing and exploration of these collections, e.g. using tag clouds. However, while tagging a single image is straightforward, and sites like Flickr and Facebook also make it easy to tag portions of the uploaded photos, tagging a video sequence is more cumbersome, so users tend to tag only the overall content of a video. While research on image tagging has received considerable attention in recent years, there are still very few works that address the problem of automatically assigning tags to videos and locating them temporally within the video sequence.

In this paper we present a system for video tag suggestion and temporal localization based on collective knowledge and visual similarity of frames. The algorithm suggests new tags for a given keyframe by exploiting visual features together with the tags associated with videos and images uploaded to social sites like YouTube and Flickr. Our paper is now available online.
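To give a rough feel for the idea of suggesting tags from visually similar images, here is a minimal sketch of similarity-weighted tag voting among nearest neighbours. It is not the method from the paper: the feature vectors, the cosine similarity measure, and the names `suggest_tags`, `k` and `top_n` are all assumptions made for illustration.

```python
from collections import Counter
from math import sqrt


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = sqrt(sum(x * x for x in a))
    norm_b = sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def suggest_tags(keyframe_feature, tagged_images, k=3, top_n=2):
    """Suggest tags for a keyframe (hypothetical helper, not the paper's algorithm).

    tagged_images is a list of (feature_vector, tags) pairs, standing in for
    images downloaded from a social site together with their user tags.
    """
    # Score every tagged image by its visual similarity to the keyframe.
    scored = [
        (cosine_similarity(keyframe_feature, feature), tags)
        for feature, tags in tagged_images
    ]
    # Keep the k most similar images.
    scored.sort(key=lambda item: item[0], reverse=True)

    # Each neighbour votes for its tags, weighted by its similarity.
    votes = Counter()
    for similarity, tags in scored[:k]:
        for tag in set(tags):
            votes[tag] += similarity

    return [tag for tag, _ in votes.most_common(top_n)]


# Toy data: 2-D "features" invented purely for this example.
images = [
    ([1.0, 0.0], ["beach", "sea"]),
    ([0.9, 0.1], ["beach"]),
    ([0.0, 1.0], ["city"]),
]
suggested = suggest_tags([1.0, 0.0], images, k=2, top_n=1)
```

Applying the same function to the keyframe of each shot would attach tags to individual shots, which is one simple way to think about temporal localization.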
