I am co-organizing a special issue for the Computer Vision and Image Understanding journal on “Computer Vision and the Web”, together with Shih-Fu Chang (Columbia University), Gang Hua (Microsoft Research Asia), Thomas Mensink (Univ. of Amsterdam), Greg Mori (Simon Fraser Univ.) and Rahul Sukthankar (Google Research). You can see all the details on the call for papers.

We gave a tutorial on “Image Tag Assignment, Refinement and Retrieval” at CVPR 2016, based on our survey. The focus is on challenges and solutions for content-based image retrieval in the context of online image sharing. We present a unified review on three problems: tag assignment, refinement, and tag-based image retrieval.

The slides are available on this page.

I am co-organizing the 4th Int’l Workshop on Web-scale Vision and Social Media (VSM) at ECCV 2016, with Marco Bertini (Univ. Florence, Italy) and Thomas Mensink (Univ. Amsterdam, NL).

Website: https://sites.google.com/site/vsm2016eccv/

Vision and social media has recently become a very active inter-disciplinary research area, involving computer vision, multimedia, machine learning, and data mining. This workshop aims to bring together researchers in the related fields to promote new research directions for problems involving vision and social media, such as large-scale visual content analysis, search and mining.

Everything you wanted to know about image tagging, tag refinement and social image retrieval. Our paper has been (finally) accepted to ACM Computing Surveys! This is a titanic effort, by Xirong Li, Tiberio Uricchio, myself, Marco Bertini, Cees Snoek and Alberto Del Bimbo, to structure the growing literature in the field, understand the ingredients of the main works, clarify their connections and differences, and recognize their merits and limitations.

A pre-print is available on arXiv and the source code is on GitHub.

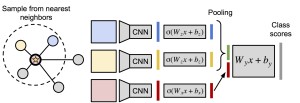

Our paper “Love Thy Neighbors: Image Annotation by Exploiting Image Metadata”, by J. Johnson*, L. Ballan* and L. Fei-Fei (* equal contribution), has been accepted to ICCV 2015. A pre-print is now available on arXiv.

Some images that are difficult to recognize on their own may become clearer in the context of a neighborhood of related images with similar social-network metadata. We build on this intuition to improve multilabel image annotation. Our model uses image metadata nonparametrically to generate neighborhoods of related images using Jaccard similarities, then uses a deep neural network to blend visual information from the image and its neighbors.
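To make the neighborhood idea concrete, here is a minimal sketch of the metadata step only: it ranks candidate images by the Jaccard similarity of their tag sets. The function names, the toy tag lists, and the choice to drop zero-similarity candidates are my own assumptions for illustration, not the code from the paper, and the neural network that blends the visual features is not shown.

```python
def jaccard(a, b):
    """Jaccard similarity |A ∩ B| / |A ∪ B| between two collections of tags."""
    a, b = set(a), set(b)
    union = a | b
    if not union:
        return 0.0  # convention chosen here: two empty tag sets are not similar
    return len(a & b) / len(union)


def nearest_neighbors(query_tags, candidates, k=3):
    """Return up to k candidate ids, most similar metadata first.

    `candidates` maps an image id to its list of tags (hypothetical layout).
    Candidates sharing no tag with the query are dropped.
    """
    scored = [(jaccard(query_tags, tags), image_id)
              for image_id, tags in candidates.items()]
    # Highest similarity first; ties broken by id for a deterministic order.
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [image_id for score, image_id in scored[:k] if score > 0]


if __name__ == "__main__":
    candidates = {
        "a": ["beach", "sea"],
        "b": ["sea", "sunset", "boat"],
        "c": ["city"],
    }
    print(nearest_neighbors(["sea", "beach", "sand"], candidates, k=2))
```

Because the neighborhood is computed directly from the metadata at query time, no vocabulary of tags has to be fixed in advance.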

We gave a tutorial on “Image Tag Assignment, Refinement and Retrieval” at ACM MM 2015, based on our recent survey. Our tutorial focuses on challenges and solutions for content-based image retrieval in the context of online image sharing and tagging. We present a unified review on three closely linked problems: tag assignment, tag refinement, and tag-based image retrieval. We introduce a taxonomy to structure the growing literature, understand the ingredients of the main works, and recognize their merits and limitations.

We also provided a hands-on session with the main methods, software and datasets. All data, code and slides are online at: http://www.micc.unifi.it/tagsurvey

Our paper “A Data-Driven Approach for Tag Refinement and Localization in Web Videos”, by myself, Marco Bertini, Giuseppe Serra, Alberto Del Bimbo, has been accepted for publication in Computer Vision and Image Understanding (CVIU) and is now available online.

Alberto Del Bimbo has also been invited to present our work at the Workshop on Large-Scale Video Search and Mining at CVPR 2015.

Estimating the relevance of a specific tag with respect to the visual content of a given image or video has become the key problem in obtaining reliable and objective tags. With video, tag localization is also required to index and access the content properly. In this paper, we present a data-driven approach for automatic video annotation that expands the original tags through images retrieved from photo-sharing websites such as Flickr and from search engines such as Google or Bing. Compared to previous approaches that require training classifiers for each tag, our approach has few parameters and permits an open vocabulary.

Last Friday I visited Fei-Fei Li’s Vision Lab at Stanford University and I had the pleasure of giving a very informal talk on our ongoing work on social media annotation. The slides of the talk are available online.

Our ICME 2013 paper “An evaluation of nearest-neighbor methods for tag refinement” by Tiberio Uricchio, Lamberto Ballan, Marco Bertini and Alberto Del Bimbo is now available online.

The success of media sharing and social networks has led to the availability of extremely large quantities of images that are tagged by users. The need for methods that manage the combination of media and metadata efficiently and effectively poses significant challenges. In particular, automatic annotation of social images has become an important research topic for the multimedia community. In this paper we propose the use of nearest-neighbor methods for tag refinement and report an extensive and rigorous evaluation on two standard large-scale datasets.
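As a rough illustration of what a nearest-neighbor approach to tag refinement can look like, the sketch below lets the k visually closest images vote on tags and keeps those with enough votes. This is a generic neighbor-voting scheme written for this post, not necessarily one of the methods evaluated in the paper; the feature vectors, the function names and the `k` and `min_votes` parameters are all hypothetical.

```python
import math
from collections import Counter


def knn_tag_votes(query_feature, dataset, k=3):
    """Count how many of the k visually nearest images carry each tag.

    `dataset` is a list of (feature_vector, tags) pairs (hypothetical layout);
    visual distance is plain Euclidean distance between feature vectors.
    """
    ranked = sorted(dataset, key=lambda item: math.dist(query_feature, item[0]))
    votes = Counter()
    for _, tags in ranked[:k]:
        votes.update(set(tags))  # each neighbor votes at most once per tag
    return votes


def refine_tags(query_feature, dataset, k=3, min_votes=2):
    """Keep tags supported by at least `min_votes` neighbors, best first."""
    votes = knn_tag_votes(query_feature, dataset, k)
    kept = [(tag, n) for tag, n in votes.items() if n >= min_votes]
    kept.sort(key=lambda pair: (-pair[1], pair[0]))
    return [tag for tag, _ in kept]


if __name__ == "__main__":
    dataset = [
        ((0.0, 0.0), ["dog", "grass"]),
        ((0.1, 0.0), ["dog", "park"]),
        ((0.0, 0.2), ["dog", "grass", "sun"]),
        ((5.0, 5.0), ["car", "street"]),
    ]
    print(refine_tags((0.0, 0.1), dataset, k=3, min_votes=2))
```

A scheme like this needs no per-tag training, which is part of what makes nearest-neighbor methods attractive at the scale of social image collections.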

Our paper “Combining Generative and Discriminative Models for Classifying Social Images from 101 Object Categories” has been accepted at ICPR’12. We use a hybrid generative-discriminative approach (LDA + SVM with non-linear kernels) over several visual descriptors (SIFT, GIST, colorSIFT).

A major contribution of our work is also the introduction of a novel dataset, called MICC-Flickr101, based on the popular Caltech 101 and collected from Flickr. We demonstrate the effectiveness and efficiency of our method by testing it on both datasets, and we evaluate the impact of combining image features and tags for object recognition.
