We gave a tutorial on “Image Tag Assignment, Refinement and Retrieval” at CVPR 2016, based on our survey. The focus is on challenges and solutions for content-based image retrieval in the context of online image sharing. We present a unified review of three problems: tag assignment, tag refinement, and tag-based image retrieval.

The slides are available on this page.

Everything you wanted to know about image tagging, tag refinement and social image retrieval. Our paper has been (finally) accepted to ACM Computing Surveys! This is a titanic effort by Xirong Li, Tiberio Uricchio, myself, Marco Bertini, Cees Snoek and Alberto Del Bimbo to structure the growing literature in the field, understand the ingredients of the main works, clarify their connections and differences, and recognize their merits and limitations.

A pre-print is available on arXiv and the source code is on GitHub.

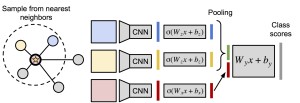

Our paper “Love Thy Neighbors: Image Annotation by Exploiting Image Metadata”, by J. Johnson*, L. Ballan* and L. Fei-Fei (* equal contribution), has been accepted to ICCV 2015. A pre-print is now available on arXiv.

Some images that are difficult to recognize on their own may become clearer in the context of a neighborhood of related images with similar social-network metadata. We build on this intuition to improve multilabel image annotation. Our model uses image metadata nonparametrically to generate neighborhoods of related images using Jaccard similarities, then uses a deep neural network to blend visual information from the image and its neighbors.
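To make the neighborhood step concrete, here is a minimal sketch of ranking images by the Jaccard similarity of their metadata. It assumes each image's metadata is a plain set of tags; the names `jaccard`, `nearest_neighbors`, and the toy `corpus` are hypothetical and are not taken from the paper's code. The deep network that blends the visual information is not shown.

```python
def jaccard(a, b):
    """Jaccard similarity |A ∩ B| / |A ∪ B| between two collections of tags."""
    a, b = set(a), set(b)
    union = a | b
    if not union:
        return 0.0  # convention chosen here: two empty sets have similarity 0
    return len(a & b) / len(union)


def nearest_neighbors(query_tags, corpus, k=3):
    """Return the k images whose metadata is most similar to query_tags.

    corpus maps an image id to its set of metadata tags (an assumed layout).
    Ties are broken by image id so the result is deterministic.
    """
    scored = [(jaccard(query_tags, tags), image_id)
              for image_id, tags in corpus.items()]
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [(image_id, score) for score, image_id in scored[:k]]


# Toy metadata, invented for illustration only.
corpus = {
    "img_a": {"beach", "sunset", "sea"},
    "img_b": {"beach", "sea", "surf"},
    "img_c": {"city", "night"},
}
neighbors = nearest_neighbors({"beach", "sea"}, corpus, k=2)
```

Because the neighborhood is computed directly from the metadata rather than learned, the same procedure works unchanged if the vocabulary of tags changes at test time.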

Our ICMR 2014 full paper “A Cross-media Model for Automatic Image Annotation” by Lamberto Ballan, Tiberio Uricchio, Lorenzo Seidenari and Alberto Del Bimbo has been accepted for oral presentation and it is now available online.

Automatic image annotation is still an important open problem in multimedia and computer vision. The success of media sharing websites has led to the availability of large collections of images tagged with human-provided labels. Many approaches previously proposed in the literature do not accurately capture the intricate dependencies between image content and annotations. We propose a learning procedure based on KCCA which finds a mapping between visual and textual words by projecting them into a latent meaning space. The learned mapping is then used to annotate new images using advanced nearest-neighbor voting methods.
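For readers unfamiliar with KCCA, the commonly used regularized formulation can be written as follows. This is the textbook objective, given here as an assumption about the general setup rather than the exact variant used in the paper. The symbols are introduced for illustration: $K_x$ and $K_y$ are the $n \times n$ kernel (Gram) matrices of the visual and textual views over $n$ training images, $\alpha, \beta \in \mathbb{R}^n$ are the coefficient vectors to be learned, and $\kappa \ge 0$ is a regularization parameter.

$$
\rho = \max_{\alpha, \beta}
\frac{\alpha^\top K_x K_y \beta}
{\sqrt{\left(\alpha^\top K_x^2 \alpha + \kappa\, \alpha^\top K_x \alpha\right)
\left(\beta^\top K_y^2 \beta + \kappa\, \beta^\top K_y \beta\right)}}
$$

Under this formulation, a new image $x$ is projected into the latent space through $\sum_{i=1}^{n} \alpha_i\, k_x(x_i, x)$, where $k_x$ is the visual kernel and $x_i$ are the training images; nearest neighbors can then be searched in that space.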

Our ICME 2013 paper “An evaluation of nearest-neighbor methods for tag refinement” by Tiberio Uricchio, Lamberto Ballan, Marco Bertini and Alberto Del Bimbo is now available online.

The success of media sharing and social networks has led to the availability of extremely large quantities of images that are tagged by users. The need for methods that manage the combination of media and metadata efficiently and effectively poses significant challenges. In particular, automatic annotation of social images has become an important research topic for the multimedia community. In this paper we propose the use of nearest-neighbor methods for tag refinement and report an extensive and rigorous evaluation on two standard large-scale datasets.
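As a rough illustration of the idea, the sketch below refines a user's tags by plain majority voting among the visually nearest training images. It is a simplified baseline under stated assumptions, not one of the specific methods evaluated in the paper: features are short tuples compared by Euclidean distance, and the function names, the `min_votes` threshold, and the toy data are all hypothetical.

```python
import math
from collections import Counter


def knn_tag_votes(query_feature, train_features, train_tags, k=3):
    """Count how often each tag occurs among the k visually nearest images."""
    distances = [(math.dist(query_feature, feature), index)
                 for index, feature in enumerate(train_features)]
    distances.sort()
    votes = Counter()
    for _, index in distances[:k]:
        votes.update(set(train_tags[index]))  # each neighbor votes once per tag
    return votes


def refine_tags(user_tags, votes, min_votes=2):
    """Keep user tags supported by the neighbors and suggest missing ones."""
    kept = [tag for tag in user_tags if votes[tag] >= min_votes]
    suggested = [tag for tag, count in votes.most_common()
                 if count >= min_votes and tag not in user_tags]
    return kept, suggested


# Toy data, invented for illustration only.
train_features = [(0.0, 0.0), (0.1, 0.0), (0.0, 0.2), (5.0, 5.0)]
train_tags = [["dog", "grass"], ["dog", "park"], ["dog", "grass"], ["car"]]

votes = knn_tag_votes((0.05, 0.05), train_features, train_tags, k=3)
kept, suggested = refine_tags(["dog", "holiday"], votes, min_votes=2)
```

In this toy run the unsupported tag `holiday` is dropped and `grass` is proposed as a missing tag; real systems typically weight votes by distance or tag frequency rather than counting them equally.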
